Monday, January 04, 2010

Algebra 1: Skills List - Spring Semester

I spent a good deal of time right before break trying to figure out exactly how far I can push my students in the second semester of Algebra 1. These skill items will be broken down into chunks for the skills tests, and MC-ized (converted to multiple choice) for the benchmarks and final exam. I regret how many concepts I had to leave out due to time pressure, and still the list seems daunting and endless.

If you're interested, this is what my students will be doing over the coming months.

doc / pdf

Posted by Dan Wekselgreene

9 comments

Labels: algebra 1, assessment

Tuesday, October 27, 2009

Putting students in control of their learning

In the last couple of years, I've worked to really clarify exactly what skills I expect my students to learn. The assessment system makes it crystal clear what skills students know and don't know. And then I realized: Oh wait - it's only crystal clear to me. Students focus on their test scores, and come in to retake and improve tests, but they really don't think about what mathematical content they need to develop - only what test number they need to retake. I still have a few students who insist on retaking skills tests even though they haven't done any work to learn the skills that they got wrong the first time. Even when this fails to produce the results they want, they still resist actually working with me to learn the skill.

I think the next step is helping students understand what the individual skills consist of, and what their personal ability level is on each skill. I want students to understand the connection between their level of numeracy and their success in mastering algebraic concepts. I also want students to make connections between their behaviors in class and their growth (or lack of growth) in the lesson's objectives. Finally, I want to provide students with greater differentiation so that all students can feel both challenged and successful.

So, I put all of that together into a new plan for beginning and ending class. Students will start class with a 10-minute Do Now that has three parts. Part 1 is a Numeracy Skill Builder that targets a specific elementary math concept that is either key to the specific lesson, or something that students have been struggling with. Part 2 consists of one or two algebra concepts that are the lesson objectives. These are broken into basic, proficient, and advanced levels. The proficient level is the form in which the concept will be tested on a skills test. Students are told to solve only one problem in each concept, at the level they feel most comfortable with. Part 3 is a multiple choice test prep question. The purpose of this is obvious, as we need to get students ready for state tests, ACTs, placement tests, and so on.

Students have 10 minutes to complete these problems individually and silently. No helping is permitted here (in general), because the purpose is for students to really get a sense of what they know at the beginning of class on their own. At the end of the 10 minutes, I show the answers so students can see how they did, but we don't spend time actually reviewing these specific problems. I quickly collect the papers.

We have the lesson. Ok.

Now, in the last 5-7 minutes, I hand back the papers. On the back, students complete the Exit Slip / Reflection. They are supposed to go back to the Do Now problems, pick one algebra concept, and try a higher level problem. The idea is for them to see how much they can improve in an objective over the course of the class period. So, even if they are only able to accomplish the basic level (when they couldn't before), they can see growth in themselves and feel good about that. Students who could already do the advanced concepts at the beginning of class have a shot at a harder challenge problem, so that they too can push their thinking (my advanced students really like this).

I just started doing this today, so I don't have too much to report about it yet. It seems to have gone well, though it took longer than the 10 minutes because I needed to explain the process a few times until they all got what I was talking about. As it becomes part of the routine, I'll know more about what impact it is really having.

Here is the first one we did, in PDF and Word formats.

I'd love to get any feedback on any part of this.

Edit

We decided to make the reflection portion into a progress tracker, instead of copying it individually on the back of each Do Now. This log will be kept in a binder in the class. This will allow students to see how they did in previous classes as they are filling out the current reflection. It will also be a very useful document for discussions during grade conferences.

Posted by Dan Wekselgreene

8 comments

Labels: algebra 1, assessment, classroom structure, differentiation

Monday, September 07, 2009

Algebra 1: Skills List

My goal for this weekend was to complete a rough draft of all the skill items that will be assessed on the first semester final exam. These items are assessed in chunks on the weekly skills tests, and in larger chunks on the 6-week benchmark exams. After each benchmark exam, the plan is to spend a lesson or two on targeted reteaching - any ideas that people have on how to make this effective would be very much appreciated.

I've finished the list, and am interested to hear what other Algebra 1 teachers think about the scope and detail of the items. What would you add? Take away?

Posted by Dan Wekselgreene

9 comments

Labels: algebra 1, assessment, cumulative exams

Saturday, January 17, 2009

Do skills tests work?

As I've written about before, a large part of the students' grades this year in Algebra 2 is based on the skills tests. The method I'm using is based on Dan's, but I've modified it quite a bit. I'll save reflecting on the details of the method, and on what should be kept or changed, for the end of the year. I'm still getting a feel for the process, and what I've been doing has worked well enough that I don't want to significantly alter it until next year.

The crux of the method is that students are primarily assessed on smaller bits of information, more frequently. They are also encouraged to try and try again at the same concepts until they master them. Since students learn at different rates, and have different things going on in their lives that may prevent them from learning at a certain point in time, they can relearn and retake the skills tests whenever they want, before the end of the semester.

Instead of assessing each skill individually, I've been grouping them into clusters of 4 or 5 related skills. If a student gets, for example, the first 3 out of 5 correct, the score is 3/5. If they retake it, and get the last 4 right (but miss the first this time), I'll raise the score to 4/5, not 5/5 - even though the first one was "mastered" the first time around. This promotes lots of retaking, which is what I want, since my students really need to practice and practice in order to retain concepts.
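To make the retake rule concrete, here is a minimal Python sketch of how a cluster score could be recorded. The function name `cluster_score` and the list-of-booleans representation are my own assumptions for illustration, not part of any actual gradebook.

```python
def cluster_score(attempts):
    """Return the recorded score for one skills-test cluster.

    Each attempt is a list of booleans, one per skill in the cluster.
    The recorded score is the best single-attempt total, NOT the union
    of skills answered correctly across different attempts.
    """
    if not attempts:
        return 0
    return max(sum(attempt) for attempt in attempts)


# The example from the paragraph above (hypothetical representation).
first_try = [True, True, True, False, False]  # first 3 of 5 correct
retake = [False, True, True, True, True]      # last 4 of 5 correct

recorded = cluster_score([first_try, retake])
print(f"{recorded}/5")  # prints 4/5
```

Taking the maximum over whole attempts, rather than crediting each skill once it has been answered correctly, is what keeps the score at 4/5 here and gives students a reason to retake the full cluster.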

It took students a while to understand how this system works, but as they figure it out, they love it, because it gives them a chance to really improve their grade when they fall behind. I've had a handful of students bring their grades up from Fs to Cs or Bs just in the last two to three weeks before finals, where this never would have been possible before.

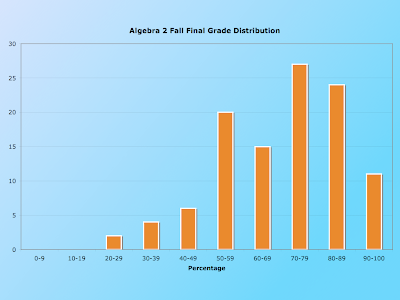

My big fear, of course, is that this style of "micro-testing" would lead to artificially high grades, and that students' retention of material would not pan out. I've been eagerly anticipating the results of the final exam to get some relevant data. The final consisted of 50 questions that were compiled from the skills tests, though of course with different values. First off, here is the distribution of grades on the final exam:

Though this may not look like something to cheer about, for a DCP final exam, this is actually quite good. The average score was a 70 and the median was a 72. But, I was more interested in thinking about the relationship between students' skills test percent and the final exam percent. If the system works as it is meant to, the skills test score should strongly predict the final exam score. The next graph shows a scatterplot of this relationship.

The purple dotted line shows what a y = x relationship would look like, and clearly (as I expected) there are more dots below the line than above - indicating students who performed better on the skills tests than on the final. But how much of a difference was there? I added in the best-fit line, and though it deviates from the purple line, it actually strikes me as not that bad. It's clear that all but a handful of students who failed the skills tests (i.e. didn't do well the first time, and didn't bother retaking them) also failed the final exam. While these students concern me greatly in terms of the task we have in motivating and educating our target students, they actually support the idea that the skills test scores are predictive of the final exam score.
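For anyone who wants to run the same comparison on their own classes, here is a rough Python sketch of the two pieces described above: an ordinary least-squares best-fit line, and a count of students above and below the y = x line. The numbers are made up purely for illustration and are not my students' data, and `best_fit_line` is a hypothetical helper name.

```python
def best_fit_line(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up (skills test %, final exam %) pairs, for illustration only.
skills = [40, 55, 70, 80, 90, 95]
finals = [35, 50, 60, 82, 85, 88]

slope, intercept = best_fit_line(skills, finals)

# Students below y = x did better on the skills tests than on the final.
below = sum(1 for s, f in zip(skills, finals) if f < s)
above = sum(1 for s, f in zip(skills, finals) if f > s)

print(f"best fit: final = {slope:.2f} * skills + {intercept:.2f}")
print(f"{below} below y = x, {above} above")
```

A slope near 1 and an intercept near 0 would mean the best-fit line sits close to the y = x line, which is the pattern to hope for if skills test scores really do predict final exam scores.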

The section of most concern to me is that in the red box. These are the students who had a passing score on the skills tests, but failed the final exam. Are there enough students in that section to show that the system doesn't work? I'm not really sure. Of the students who passed the skills tests, many more of them passed the final exam than did not, and I find this encouraging. And, the 24 dots in the red box all did better than 50% on the final, which means they didn't have catastrophic failure (which is not that uncommon on our final exams). But, they didn't show what we typically consider "adequate" retention, since they didn't get at least 70% of the questions right.
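Here is a small Python sketch of how the four regions of the scatterplot, including the "red box," could be counted. It assumes a 70% pass mark on both measures; I only stated that cutoff for the final exam, so applying it to the skills tests is an assumption. The student pairs are invented for illustration.

```python
from collections import Counter

PASS_MARK = 70  # assumed for both measures; only stated for the final exam


def classify(skills_pct, final_pct, pass_mark=PASS_MARK):
    """Place one student into one of the four regions of the scatterplot."""
    passed_skills = skills_pct >= pass_mark
    passed_final = final_pct >= pass_mark
    if passed_skills and passed_final:
        return "passed both"
    if passed_skills:
        return "passed skills, failed final"  # the "red box"
    if passed_final:
        return "failed skills, passed final"
    return "failed both"


# Invented (skills test %, final exam %) pairs, for illustration only.
students = [(85, 78), (72, 64), (90, 55), (45, 40), (60, 71)]
counts = Counter(classify(s, f) for s, f in students)

for region, n in counts.items():
    print(f"{region}: {n}")
```

Comparing the "passed skills, failed final" count against the "passed both" count gives a quick read on how often a passing skills test score failed to carry over to the final.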

I'm posting this because I would like feedback and impressions from other teachers. What does the data say to you? And for those of you using a concept quiz/skills test method, what kinds of results are you seeing?

Posted by Dan Wekselgreene

3 comments

Labels: algebra 2, assessment, cumulative exams